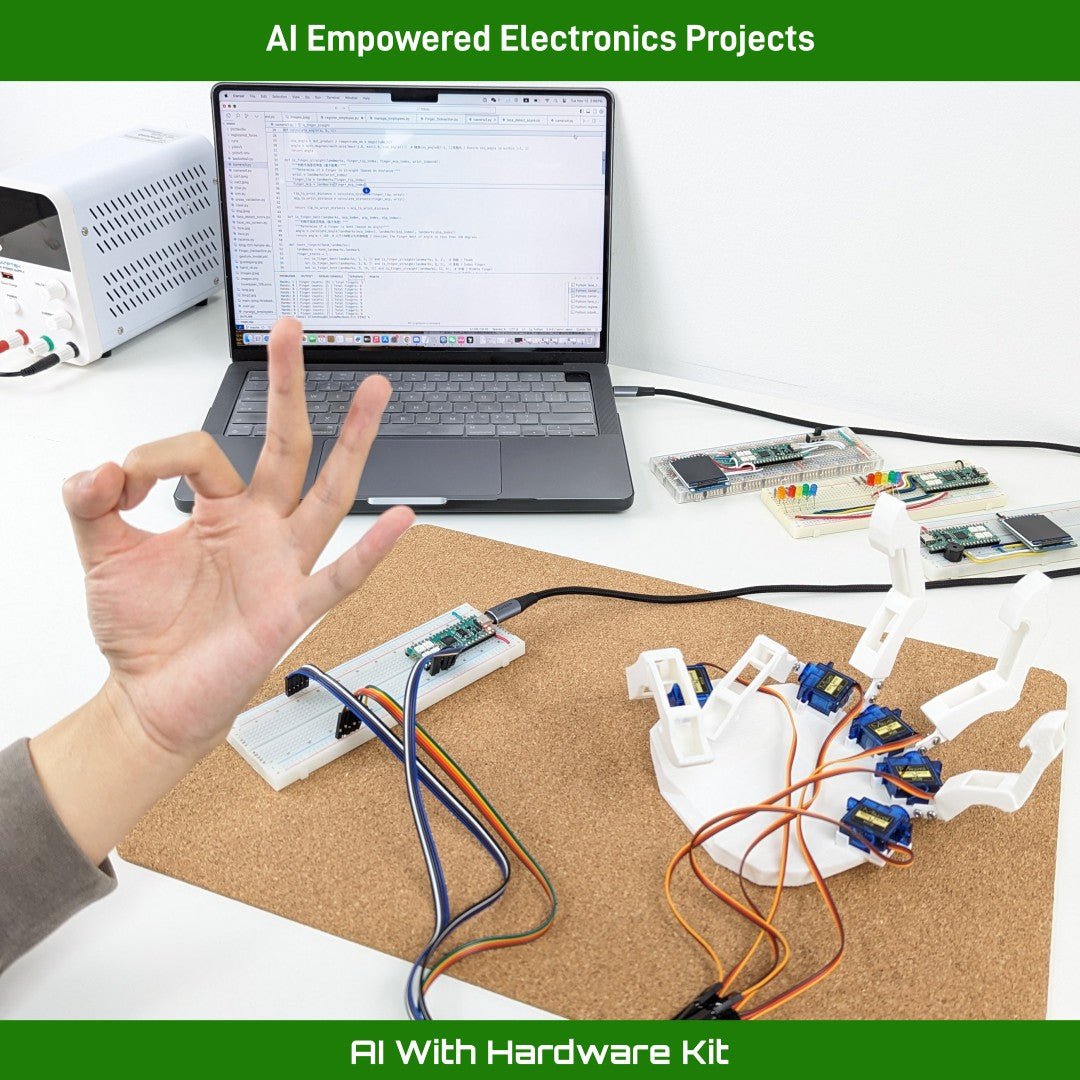

This kit starts with fundamental AI concepts and guides you through six hands-on projects, ranging from Python-based CNN training to real-time hardware integration using microcontrollers, OpenCV, and PyTorch. You'll build systems that can recognize images, track gestures, and even control robotic hardware.

Who It’s For

Ideal for learners at high‑school or college level aiming to:

- Understand AI through hands-on computer vision projects

- Bridge the gap between software-based ML and embedded systems

- Build real-world systems such as robotic hands or gesture-controlled interfaces

What's Included in the Kit

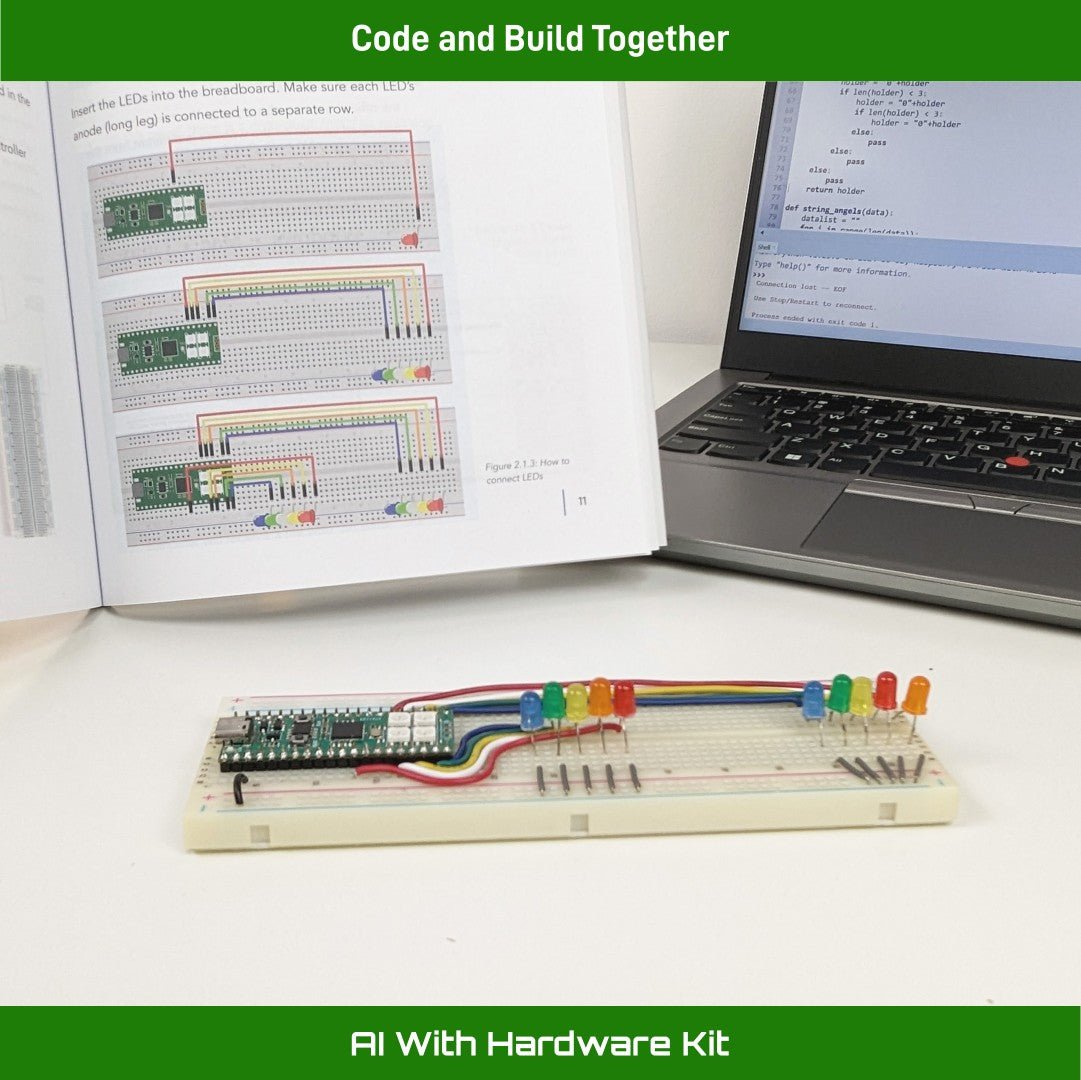

- 200+ page guidebook with 7 chapters

- Permanently free access to online tutorials and examples

- Python/Jupyter-based tutorial code

- OpenCV, MediaPipe, PyTorch examples

- Microcontroller board (e.g., RP2040 or similar)

- Sensors, LCD display, LED modules, servo motors, wiring accessories

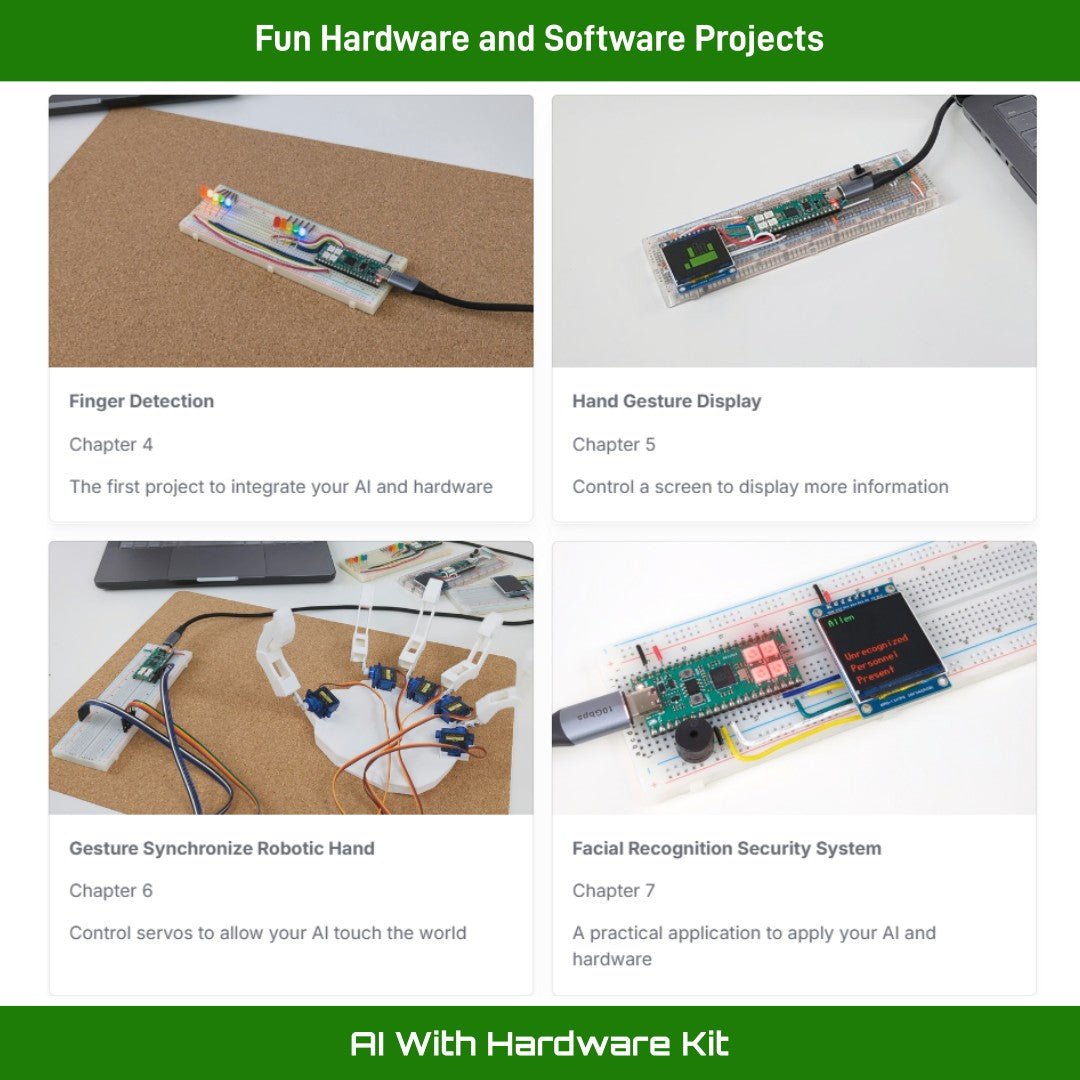

Projects You Can Build & Play

Visit our learning academy or click the links below for each project's video tutorial.

- Linear Regression (Data Prediction)

- Image Classification (Cats and Dogs)

- Finger Detection

- Hand Gesture with LCD Display

- Control a Robotic Hand

- Facial Recognition

For the robotic hand project, you can find additional instructional materials online. Note that the physical "artificial hand" is not included in the kit, but the 3D-printable model is available for download. If you don't have a 3D printer available, use the plastic rod included with the servo motor for learning purposes.

About the Tutorial

The included color-printed guidebook covers the following topics and skills, helping learners to:

- Learn Python, Pandas, and Matplotlib for data analysis

- Understand machine learning fundamentals like linear regression

- Train convolutional neural networks (CNNs) for image classification

- Build real-time computer vision systems with OpenCV and MediaPipe

- Develop gesture-controlled interfaces and screen interaction

- Assemble a servo-driven robotic hand with AI mimicry

- Explore face detection, face recognition, and multi-device communication
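To give a sense of the first machine learning topic in the list above, here is a minimal sketch of linear regression by ordinary least squares. It is not taken from the guidebook: the function name `fit_line` and the sample data are made up for illustration, and it uses only the Python standard library rather than Pandas or Matplotlib.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of x-y co-deviations
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical sample data: hours studied vs. test score
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 54.0, 56.0, 58.0, 60.0]

slope, intercept = fit_line(hours, scores)
predicted = slope * 6.0 + intercept  # predict the score for 6 hours
print(f"slope={slope}, intercept={intercept}, predicted={predicted}")
```

The same fit is usually done with a numerical library in practice; writing it out by hand simply shows that "training" here means choosing the slope and intercept that minimize the squared prediction error.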

The book walks you through each concept with illustrative figures, step-by-step instructions, detailed circuit diagrams, and real-world examples. It's designed to make technical learning approachable, even if you're new to hardware or coding.

To complement the book, you'll also get access to online learning resources, including code examples, simulation tools, and guided video content, so you can learn at your own pace, wherever you are.

Why Learn with This Kit?

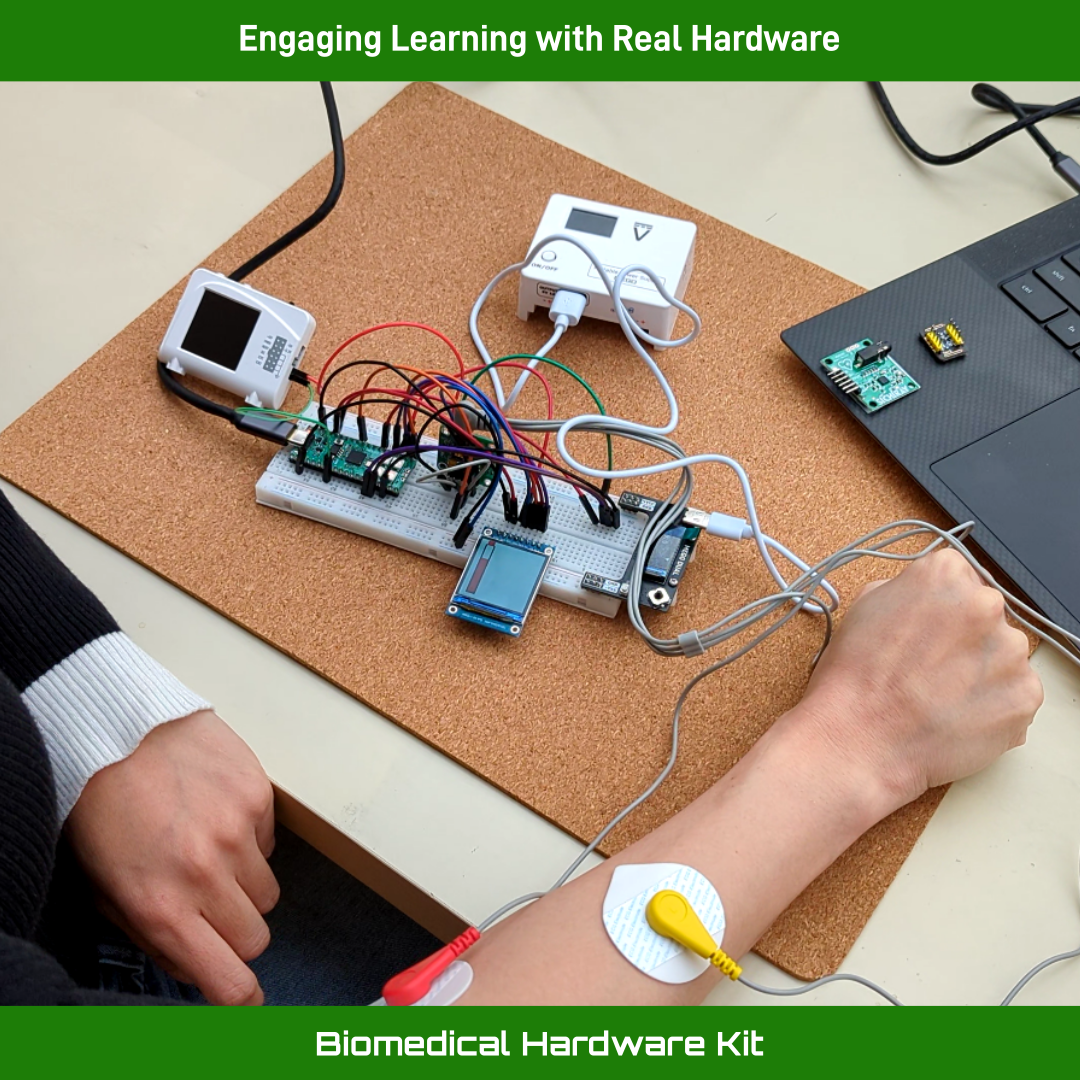

This kit is the perfect starting point for anyone interested in the intersection of AI and hardware. Designed as a foundational part of our AI series, it builds essential skills in Python, machine learning, and computer vision, then connects them directly to real-world applications using sensors, microcontrollers, and embedded projects.