Artificial Intelligence Learning Kit: Hands-on Guide to Fine-Tuning Neural Network Models in Computer Vision

Based on our prior experience, we realized that a single project alone cannot possibly cover the breadth of knowledge needed to truly understand AI and hardware integration. As such, we expanded our approach to include a total of six projects organized into a seven-chapter book. The difficulty level gradually increases: the first two projects focus on simple, software-based tasks to introduce fundamental AI concepts, tools, and environment setup, while the remaining four projects add hardware interactions. These cover a range of components—something that lights up, something that makes sound, something that displays information, and even a robotic hand that moves.

This kit isn’t just about learning concepts; it’s about mastering the practical application of AI principles through projects that integrate hardware and software. With an emphasis on project-based learning, the kit includes structured lessons and exercises to:

- Build AI-powered systems

- Program microcontrollers

- Interface with real-world hardware like sensors, LCDs, and robotic parts

The AI Learning Kit introduces students to Python programming, machine learning basics, computer vision tools such as OpenCV and MediaPipe, and deep learning frameworks such as PyTorch.

AI is rapidly becoming an integral part of our lives, from tools like ChatGPT to autonomous vehicles. Yet, for many, it remains an abstract concept. This learning kit bridges that gap by offering hands-on projects that connect AI with hardware, making AI accessible to learners of all levels.

Project Overview

The learning kit begins with foundational concepts such as numerical prediction and image classification, progresses to gesture and facial recognition, and culminates in advanced embedded controls. Each chapter introduces the core concept first, followed by an in-depth look at the theory behind each model, complete with illustrative examples. A step-by-step procedure then guides you through building a fully functional project, and each project ends with suggestions for extensions and applications, encouraging you to take what you’ve learned and go further. We believe in guiding you through each project, building your understanding of the theory and skills, and then letting you extend the project with your own creativity.

Why Project-Based Learning Matters

Project-based learning isn’t just about acquiring skills—it’s about solving problems and building confidence. Here’s why this approach works:

- Practical Skills: You’ll learn not only to write code but also to debug, test, and integrate systems.

- Creative Problem-Solving: Tackle challenges like hardware setup, AI model tuning, and system optimization.

- Portfolio Building: Each project you complete can become part of your portfolio, showcasing your skills to future employers or collaborators.

We’ve crafted a detailed tutorial book with step-by-step instructions for each project. Each project also includes a video walkthrough and GitHub open-source code, so you can jump right into creating and experimenting. If coding feels intimidating, don’t worry—just follow our instructions, and you’ll be able to build confidently at your own pace.

Our approach focuses on project-based learning, turning AI into an interactive, hands-on experience. You’ll tackle exciting projects like finger detection, hand gesture recognition, and image classification with CNNs, making complex AI concepts easier to grasp. Beyond the sample projects, we encourage you to innovate and create your own. Plus, our technical Discord community offers a space to share ideas, discuss challenges, and collaborate with others on your AI journey.

Chapter-by-Chapter Learning Overview

Introduction to AI & Programming Fundamentals

Students will grasp the foundational knowledge of AI applications and programming. Topics such as Python programming, data analysis using Pandas and Matplotlib, and setting up development environments build a strong base for technical projects.

Machine Learning - Linear Regression

Learners will dive into machine learning basics, particularly linear regression. Applications like a housing price prediction project show practical use in data-driven decision-making.
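To give a feel for what linear regression does, here is a minimal sketch in plain Python that fits a straight line with ordinary least squares. The function name `fit_line` and the tiny area/price dataset are made up for illustration and are not taken from the book; the actual chapter may well use a library instead.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalised).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical toy data: floor area (m^2) and price (in thousands).
areas = [50, 70, 90, 110]
prices = [150, 210, 270, 330]

slope, intercept = fit_line(areas, prices)
predicted = slope * 100 + intercept  # estimated price for a 100 m^2 home
print(f"price = {slope:.2f} * area + {intercept:.2f}")
```

Real housing data is noisy and has many features, so a real project would typically use more inputs and check the fit on held-out data.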

Image Classification with Convolutional Neural Networks

This chapter explains CNN architecture and offers hands-on projects like classifying dog and cat images. Such skills are useful for visual recognition tasks in industries like healthcare and robotics.
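The core operation inside a convolutional layer can be sketched in a few lines of plain Python. The example below slides a small kernel over a toy image and records the weighted sum at each position (the cross-correlation that deep learning frameworks call "convolution"). The helper `conv2d`, the 3x4 image, and the hand-picked kernel are illustrative assumptions; in a trained CNN the kernel values are learned rather than chosen by hand.

```python
def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation on nested lists."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0
            # Weighted sum of the image patch under the kernel.
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


# Toy image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Horizontal difference kernel: responds where brightness rises left to right.
kernel = [[-1, 1]]

feature_map = conv2d(image, kernel)
print(feature_map)
```

The feature map is non-zero only at the column where the dark region meets the bright one, which is the sense in which a filter "detects" an edge.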

Real-Time Finger Detection & Motion Tracking

Using computer vision libraries like MediaPipe and OpenCV, students will implement real-time motion detection systems, which are applicable in gesture-controlled devices and security systems.
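As a rough illustration of how finger detection can work once a hand tracker has found the hand, the sketch below counts raised fingers from a list of 21 hand landmarks. It assumes the MediaPipe Hands landmark numbering (fingertips at indices 8, 12, 16, 20 and the matching PIP joints at 6, 10, 14, 18) and normalized image coordinates where y grows downward. The "tip above PIP joint" rule is a common heuristic for an upright hand, not necessarily the method used in the book, and the landmark values here are synthetic rather than read from a camera.

```python
# Landmark indices in the MediaPipe Hands numbering (thumb left out for simplicity).
FINGER_TIPS = {"index": 8, "middle": 12, "ring": 16, "pinky": 20}
FINGER_PIPS = {"index": 6, "middle": 10, "ring": 14, "pinky": 18}


def raised_fingers(landmarks):
    """Return names of fingers whose tip is above its PIP joint.

    landmarks: list of 21 (x, y) pairs in normalized image coordinates,
    where a smaller y means higher up in the image.
    """
    raised = []
    for name, tip in FINGER_TIPS.items():
        pip = FINGER_PIPS[name]
        if landmarks[tip][1] < landmarks[pip][1]:
            raised.append(name)
    return raised


# Synthetic landmarks: start with every point at the same place...
landmarks = [(0.5, 0.8) for _ in range(21)]
# ...then raise the index and middle fingers (tip above PIP joint)...
landmarks[6], landmarks[8] = (0.45, 0.5), (0.45, 0.3)
landmarks[10], landmarks[12] = (0.50, 0.5), (0.50, 0.25)
# ...and curl the ring and pinky fingers (tip below PIP joint).
landmarks[14], landmarks[16] = (0.55, 0.6), (0.55, 0.7)
landmarks[18], landmarks[20] = (0.60, 0.65), (0.60, 0.72)

up = raised_fingers(landmarks)
print(up, len(up))
```

In a live system the landmark list would be refreshed for every camera frame, and the rule would need adjusting for tilted or upside-down hands.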

Screen Control by Hand Gesture Project

Students will build a system to control displays via hand gestures. This introduces gesture recognition systems relevant to user-interface designs in modern devices.

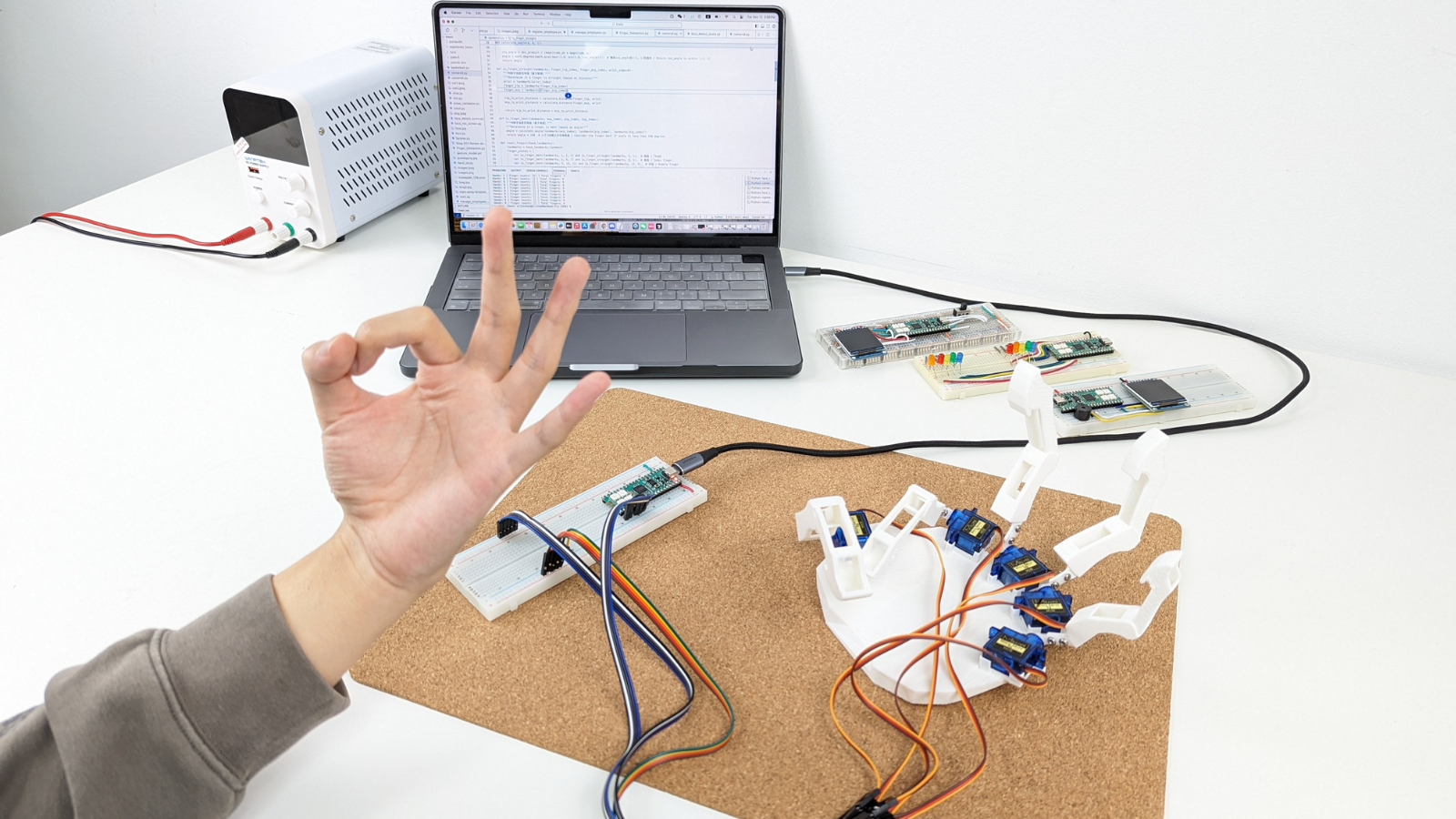

3D Printed Robotic Hand with Gesture Mimicry

A comprehensive project integrates AI, hardware, and servo motor control to create a robotic hand that mimics human gestures. Applications include assistive technologies and robotics research.
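One piece of gesture mimicry is turning "how bent is this finger" into a servo command. The sketch below maps a curl value between 0 (straight) and 1 (fully bent) to a servo angle and then to a pulse width. Every number here is an assumption chosen for illustration: a 0 to 180 degree servo driven with 500 to 2500 microsecond pulses is a common hobby-servo range, but the servo in the kit may differ, so check its datasheet. The function names are hypothetical as well.

```python
def curl_to_angle(curl, min_angle=0.0, max_angle=180.0):
    """Map a finger curl in [0, 1] to a servo angle in degrees."""
    curl = max(0.0, min(1.0, curl))  # clamp noisy tracker values
    return min_angle + curl * (max_angle - min_angle)


def angle_to_pulse_us(angle, min_us=500, max_us=2500, max_angle=180.0):
    """Map a servo angle to a pulse width in microseconds (assumed range)."""
    return round(min_us + (angle / max_angle) * (max_us - min_us))


half_bent = angle_to_pulse_us(curl_to_angle(0.5))
over_range = angle_to_pulse_us(curl_to_angle(1.2))  # clamped to fully bent
print(half_bent, over_range)
```

Clamping the input matters in practice, because landmark estimates jitter from frame to frame and an out-of-range pulse can strain a servo against its end stop.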

Face Recognition and Advanced Gesture Projects

Exploring face registration and gesture systems with PyTorch, this chapter covers facial detection systems and multi-device communication, ideal for security and IoT applications.

Applications and Future Prospects

1. Applications of our AI Learning Kit:

- Bridge Between Theory and Practice: Hands-on AI projects covering CNN image classification, gesture recognition, and hardware integration solidify learning through real-world application.

- Enhancing Existing Curriculums: Our AI Kit aligns with university courses, offering hands-on modules like "Image Classification with CNNs" and "3D Printed Robotic Hand" that complement classroom theory.

- Flexible Learning: Our kit can complement universities' approaches by providing modular, scalable projects that students can tackle independently or in teams.

2. Leverage for Learning:

- Students gain a career edge by combining hardware skills with AI programming through our kit, while real-world examples enhance ethical AI discussions in curricula.

By offering pre-structured projects and a focus on integrating AI into hardware, the learning kit fills gaps in traditional curriculums and encourages a deeper, project-based understanding of AI concepts.

Future Prospects:

Many universities worldwide are increasingly integrating AI and hardware-based projects into their curriculums, especially within engineering and computer science programs. Topics such as machine learning, computer vision, robotics, and real-time AI applications are now central to many educational initiatives.

University Approaches:

- AI Literacy: Universities like the University of Florida focus on AI literacy by combining theory with hands-on applications, addressing ethical issues, and preparing students for careers in emerging technologies.

- Project-Based Learning: Many institutions use project-based learning (PBL) to teach AI, robotics, and machine learning. This method allows students to work on real-world problems, such as housing price predictions or gesture-controlled systems, to apply AI concepts practically.

- Human-Centered AI: Programs often highlight the ethical implications of AI, encouraging students to design solutions that are inclusive, transparent, and responsible.

Industrial Robotics: Gesture-controlled robotic hands and face recognition systems can revolutionize industrial automation and collaborative robots.

Healthcare Technology: Finger detection and facial recognition algorithms find applications in monitoring patient vitals and enhancing accessibility tools.

Security Systems: Motion detection and image classification projects are crucial for surveillance and threat detection technologies.

IoT Innovations: Multi-device communication projects enable smart ecosystems with real-time control and monitoring capabilities.

1) The BOOK: The book, tutorial video, and source code (GitHub): $99 during the campaign (save $20), $119 after the campaign.

2) The KIT: The book, tutorial video, and source code (GitHub) + electronics components: $159 during the campaign (save $30), $189 after the campaign.

Electronics components: breadboard, STEPico (soldered), Type-C cable, 15 LEDs, 20 jumper wires, LCD screen (soldered), servo motor, buzzer, battery box.

3) The LAB: The book, tutorial video, and source code (GitHub) + electronics components + LOTG: $409 during the campaign (save $80), $489 after the campaign.

We are excited to announce a refined version of this device, known as MEGO2.0. Any kit that includes LOTG will automatically come with MEGO2.0.

4) The Collection: The book, tutorial video, and source code (GitHub) + electronics components + LOTG + other learning kit: $859 during the campaign (save $110), $969 after the campaign.